Quick note: AI — is it just verbal / symbolic reasoning?

"Christopher?"

What it was

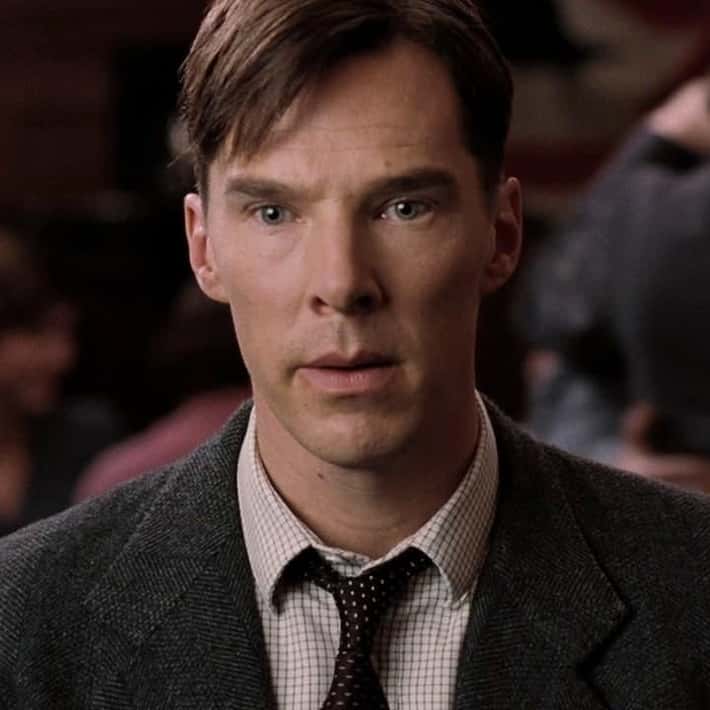

The imitation game is the test Alan Turing proposed in his 1950 paper "Computing Machinery and Intelligence". It is also the title of one of my favorite movies, "The Imitation Game" (2014), starring Benedict Cumberbatch as Turing. The film shows Turing coping with the loss of his childhood friend Christopher, so much so that he names the machine he builds to break the Enigma code after him. He calls the machine "Christopher" throughout the movie, almost giving it a personality, the way we humans do. The difference is that Christopher is a machine with a single objective: decrypt Enigma radio messages by searching through a set of possible keys. This has been the traditional sense of what an intelligent machine is. I remember that in grad school (around 2017 and earlier), “AI” referred mainly to logic-based problem-solving: algorithms like greedy search, designed to mimic structured human decision-making.

Artificial Intelligence was often defined as the capacity of a computational system to optimize an objective function within defined constraints and goals.

In other words, intelligence was framed as the ability to move through a space of possibilities and continuously adjust its actions to reach an optimum.

For example, suppose we define a simple function:

$$f(x) = (x - 3)^2$$

The “space” here is one-dimensional: all possible values of x. The goal is to find the value of x that minimizes f(x). The optimal solution is clearly x = 3, where f(x) = 0.

A gradient descent algorithm starts with a random guess for x and repeatedly updates it by moving opposite to the gradient (the slope of the function):

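$$x \leftarrow x - \eta \frac{df}{dx}$$

where η (eta) is the learning rate: a small step size that controls how quickly the system moves toward the minimum. For f(x) = (x - 3)², the gradient is df/dx = 2(x - 3), so each update moves x part of the way toward 3.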
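A minimal Python sketch of this update rule on f(x) = (x - 3)², using the derivative df/dx = 2(x - 3). The starting point, learning rate, and step count are arbitrary illustrative choices, and the function name `gradient_descent` is hypothetical.

```python
def f(x: float) -> float:
    """Objective: f(x) = (x - 3)^2, minimized at x = 3."""
    return (x - 3) ** 2


def df_dx(x: float) -> float:
    """Analytic derivative of f: df/dx = 2(x - 3)."""
    return 2 * (x - 3)


def gradient_descent(x0: float, eta: float = 0.1, steps: int = 100) -> float:
    """Repeatedly apply x <- x - eta * df/dx, starting from x0."""
    x = x0
    for _ in range(steps):
        x = x - eta * df_dx(x)  # move opposite to the slope
    return x


x_star = gradient_descent(x0=-5.0)
print(round(x_star, 6), round(f(x_star), 12))  # prints: 3.0 0.0
```

Each step multiplies the distance to 3 by (1 - 2η), so with η = 0.1 the error shrinks by 20% per step; a learning rate above 1 would make the iterates overshoot further each time and diverge.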

Conceptually, this process mirrors the way early AI systems “searched” for solutions: each step was an adjustment toward a better state, guided by feedback from the environment or a cost function. Another playful example is Pac-Man. The agent's objective is to eat as many pellets as possible while avoiding the ghosts; if it cannot avoid contact with a ghost, it has failed. Its “optimization” happens in a dynamic maze, balancing good and bad outcomes until it reaches the goal state: every pellet eaten.
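To make the Pac-Man framing concrete, here is a toy greedy agent on a static grid: at each step it walks, via breadth-first search, to the nearest remaining pellet while treating ghost cells as walls. The maze, the `greedy_pacman` and `bfs_path` names, and the static ghosts are simplifying assumptions; real Pac-Man ghosts move, which this sketch ignores.

```python
from collections import deque


def bfs_path(grid, start, goals, blocked):
    """Shortest path from start to the nearest cell in goals, avoiding walls ('#') and blocked cells."""
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    parent = {start: None}
    while queue:
        cell = queue.popleft()
        if cell in goals:
            path = []
            while cell is not None:  # walk back through parents to rebuild the path
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if (0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#"
                    and nxt not in blocked and nxt not in parent):
                parent[nxt] = cell
                queue.append(nxt)
    return None  # no pellet is reachable


def greedy_pacman(grid, start, ghosts):
    """Greedily eat the nearest reachable pellet ('.') until none remain."""
    pellets = {(r, c) for r, row in enumerate(grid) for c, ch in enumerate(row) if ch == "."}
    pos, route = start, [start]
    while pellets:
        path = bfs_path(grid, pos, pellets, ghosts)
        if path is None:
            break  # remaining pellets are cut off by ghosts or walls
        route.extend(path[1:])
        pellets -= set(path)  # eat every pellet passed on the way
        pos = path[-1]
    return route, pellets


maze = [
    "P..#.",
    ".#...",
    "...G.",
]
route, left = greedy_pacman(maze, start=(0, 0), ghosts={(2, 3)})
print(len(route) - 1, "moves, pellets left:", len(left))
```

Greedy "nearest pellet first" is not guaranteed to find the shortest tour, which is exactly the kind of efficiency gap the next section uses to compare systems.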

Higher Intelligence = Better Optimization?

When two systems operate under the same inputs and constraints, the one that reaches the defined goal with greater efficiency (fewer steps, lower computation time) is deemed the more intelligent system.
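One way to make "efficiency" measurable, assuming both systems run gradient descent on the same f(x) = (x - 3)² from the same start and stop at the same tolerance, is to count the steps each needs. The two learning rates and the tolerance are arbitrary illustrative values, and `steps_to_converge` is a hypothetical helper.

```python
def steps_to_converge(eta: float, x0: float = 0.0, tol: float = 1e-6, max_steps: int = 10_000) -> int:
    """Count gradient-descent steps on f(x) = (x - 3)^2 until |x - 3| < tol."""
    x = x0
    for step in range(1, max_steps + 1):
        x -= eta * 2 * (x - 3)  # df/dx = 2(x - 3)
        if abs(x - 3) < tol:
            return step
    return max_steps  # did not converge within the budget


slow = steps_to_converge(eta=0.1)
fast = steps_to_converge(eta=0.4)
print(f"eta=0.1: {slow} steps, eta=0.4: {fast} steps")  # prints: eta=0.1: 67 steps, eta=0.4: 10 steps
```

By the criterion above, the η = 0.4 run is the "more intelligent" system here: same inputs, same goal, far fewer steps.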

Now

Today, AI has evolved to focus not only on learning and generalization but also on generating new language, images, or sounds. This is achieved through models such as Large Language Models (LLMs), which learn the underlying probability patterns of data. Instead of memorizing sentences, an LLM estimates the likelihood of each next word based on all previous words — a process known as modeling the probability distribution of language.

For example, given the phrase "The cat sat on the", the model might learn probabilities such as:

P("mat" | "The cat sat on the") = 0.82

P("floor" | "The cat sat on the") = 0.15

P("roof" | "The cat sat on the") = 0.01 (the remaining 0.02 is spread over every other word the model knows)

The model then chooses a continuation from this distribution. Greedy decoding always takes the most likely word, "mat"; sampling returns "mat" most of the time but occasionally picks "floor" or a rarer word. Through billions of such patterns learned from text, the system can produce coherent paragraphs, stories, or explanations that appear human-written.
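A small sketch of the two decoding choices, using the three probabilities from the example. Lumping the remaining 0.02 of probability mass into a single `"<other>"` entry is an assumption for illustration; a real model spreads it over its whole vocabulary.

```python
import random

# Next-word distribution for "The cat sat on the" ("<other>" is a placeholder bucket)
next_word_probs = {"mat": 0.82, "floor": 0.15, "roof": 0.01, "<other>": 0.02}


def greedy_pick(probs: dict) -> str:
    """Greedy decoding: always return the single most likely word."""
    return max(probs, key=probs.get)


def sample_pick(probs: dict, rng: random.Random) -> str:
    """Sampling: draw a word with probability proportional to its weight."""
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


rng = random.Random(0)
print(greedy_pick(next_word_probs))  # prints: mat
draws = [sample_pick(next_word_probs, rng) for _ in range(1000)]
print({w: draws.count(w) for w in next_word_probs})  # mostly "mat", some "floor"
```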

Extending the above, when you ask an AI to “write a paragraph about the Mona Lisa,” it doesn't copy text from memory. Instead, it generates one word at a time, predicting which word is likely to come next given everything it has already written. During training, the model has seen millions of examples of how words and ideas are related; for instance, “Mona Lisa” often appears near “Leonardo da Vinci,” “painting,” and “Renaissance.” When generating text, it uses those learned probabilities to form coherent sentences. For example, it might predict that “The Mona Lisa is a famous portrait painted by Leonardo da Vinci” is a likely continuation of your prompt. Each word is chosen according to how well it fits the statistical patterns of language learned from data, which lets the system produce original, fluent paragraphs.
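The one-word-at-a-time loop can be sketched with a toy next-word table. This is a deliberate simplification: the hypothetical `NEXT` table conditions only on the previous word, whereas an LLM conditions on the entire preceding text, and the probabilities are made up for illustration.

```python
# Hypothetical next-word probabilities; a real LLM conditions on all previous tokens.
NEXT = {
    "<start>": {"The": 1.0},
    "The": {"Mona": 0.9, "painting": 0.1},
    "Mona": {"Lisa": 1.0},
    "Lisa": {"is": 0.7, "was": 0.3},
    "is": {"a": 0.8, "famous": 0.2},
    "a": {"famous": 0.6, "portrait": 0.4},
    "famous": {"portrait": 0.9, "painting": 0.1},
    "portrait": {"painted": 0.6, "by": 0.4},
    "painted": {"by": 1.0},
    "by": {"Leonardo": 1.0},
    "Leonardo": {"da": 1.0},
    "da": {"Vinci": 1.0},
    "Vinci": {"<end>": 1.0},
}


def generate(max_words: int = 20) -> str:
    """Greedily append the most likely next word until <end> or the length limit."""
    words = ["<start>"]
    while len(words) <= max_words:
        options = NEXT.get(words[-1], {"<end>": 1.0})  # unknown word: stop
        nxt = max(options, key=options.get)
        if nxt == "<end>":
            break
        words.append(nxt)
    return " ".join(words[1:])


print(generate())  # prints: The Mona Lisa is a famous portrait painted by Leonardo da Vinci
```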

Verbal / Word Reasoning

When an AI like GPT answers a logical or reasoning question in words, say, “If John is taller than Mary, and Mary is taller than Alex, who is the shortest?”, it doesn't truly “think” or “see” like a human. Instead, it performs pattern-based reasoning over language, using the same probability mechanism it uses to write text. During training, the model sees billions of examples of how humans reason in text: logical explanations, arguments, question–answer pairs, and if–then relationships. It learns how words, facts, and relationships co-occur in sentences, essentially encoding reasoning patterns in its internal parameters. So when it faces a new question, it:

1. Represents the input text as vectors (embeddings): numerical patterns capturing meaning and relationships between words.
2. Uses layers of attention to identify relevant relationships (“taller than” → comparison).
3. Generates the most likely next words to complete a correct explanation, according to patterns it has seen in similar contexts.

In other words, the reasoning is still fundamentally verbal: it manipulates symbols (words) based on learned statistical patterns rather than engaging in abstract, non-verbal thought as humans do. As an aside (not central to the argument), humans sometimes draw on not-so-abstract things like "feeling good" and past sensory experiences to reason about a future that needs action, as in "I don't feel good about this purchase."
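The "layers of attention" step can be sketched as scaled dot-product attention, softmax(QKᵀ/√d)·V, in plain Python. The three 2-dimensional embeddings are invented for illustration; real models learn vectors with hundreds or thousands of dimensions and use separate learned query, key, and value projections, which this sketch omits. It shows only the mechanics: each word's new vector is a weighted mix of all the words' vectors.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each query mixes the values, weighted by query-key similarity."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical toy embeddings; queries, keys, and values are the same vectors here.
tokens = ["John", "taller", "Mary"]
emb = [[1.0, 0.2], [0.1, 1.0], [0.9, 0.3]]
out, w = attention(emb, emb, emb)
for tok, row in zip(tokens, w):
    print(tok, [round(x, 2) for x in row])
```

In this toy, "John" attends more to "Mary" than to "taller" only because their made-up vectors point in similar directions; nothing in the computation knows what "taller" means.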

Words Fall Short

Large language models like GPT perform what can be called verbal reasoning: reasoning that operates entirely through sequences of words or tokens. They manipulate symbols using learned statistical patterns, predicting the next most likely token based on vast textual context. This process excels at imitating human discourse but remains bound by the structure and granularity of language itself.

Words are discrete, linear, and lossy. They compress complex, continuous ideas into symbolic form, which makes them useful for communication but inefficient for deep reasoning. Many phenomena — physical, sensory, emotional, and mathematical — exist in a continuous space that words can only approximate.

Consider a simple signal, such as a sine wave:

$$x(t) = \sin(2\pi f_0 t)$$

To describe this verbally, one would need many words (“a smooth oscillating curve repeating once per second”, for f₀ = 1 Hz), which is imprecise and long. Mathematically, however, the same wave can be represented compactly in the frequency domain:

$$X(f) = \frac{1}{2j}\left[\delta(f - f_0) - \delta(f + f_0)\right]$$

where j is the imaginary unit. In the time domain, the signal takes thousands of samples to encode, while in the frequency domain it collapses to a pair of spikes at ±f₀; since the signal is real, the spike at f₀ alone carries all of its information. The analogy: verbal reasoning is like the time domain (verbose, sequential, and indirect), whereas neural reasoning is like the frequency domain (compact, structural, and continuous).
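A quick numerical check of this compression, using a plain discrete Fourier transform (DFT) instead of the continuous transform. The setup (64 samples covering exactly 5 cycles) is an arbitrary choice that keeps each spike inside a single frequency bin; with a non-integer number of cycles, energy would leak into neighbouring bins.

```python
import cmath
import math

N = 64      # number of time-domain samples
CYCLES = 5  # whole cycles of the sine in the window (plays the role of f0)

# Time domain: 64 numbers are needed to describe the wave.
signal = [math.sin(2 * math.pi * CYCLES * n / N) for n in range(N)]


def dft(x):
    """Naive O(N^2) discrete Fourier transform."""
    n_total = len(x)
    return [sum(x[n] * cmath.exp(-2j * math.pi * k * n / n_total) for n in range(n_total))
            for k in range(n_total)]


magnitudes = [abs(c) for c in dft(signal)]

# Frequency domain: only bin 5 and its mirror N - 5 (the negative frequency) are non-zero.
nonzero = [k for k, m in enumerate(magnitudes) if m > 1e-6]
print(nonzero)                       # prints: [5, 59]
print(round(magnitudes[CYCLES], 6))  # prints: 32.0, i.e. N / 2
```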

Neural networks reason by learning mappings from inputs to outcomes:

$$y = \sigma(Wx + b)$$

where W represents learned weights capturing complex correlations in multidimensional space, b is a learned bias vector, and σ is a nonlinear activation function. GPT-style models are built from layers like this too, but they are trained to predict the next word in a sequence; a neural system operating directly on structured data can infer patterns, simulate dynamics, or predict outcomes without ever translating them into language.
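A minimal forward pass of a single layer in plain Python: it computes Wx + b and applies tanh as the nonlinear activation. The weights and bias are made-up numbers rather than learned ones, so this shows only how an input vector becomes an output vector with no words involved.

```python
import math


def dense_layer(W, x, b, activation=math.tanh):
    """Compute activation(W x + b) for a list-of-rows matrix W and vectors x, b."""
    return [activation(sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i)
            for row, b_i in zip(W, b)]


# Hypothetical 2x3 weight matrix and bias: maps a 3-feature input to 2 outputs.
W = [[0.5, -1.0, 0.25],
     [1.5, 0.0, -0.5]]
b = [0.1, -0.2]
x = [1.0, 2.0, 4.0]

y = dense_layer(W, x, b)
print([round(v, 4) for v in y])
```

Stacking several such layers, and fitting W and b by gradient descent, is what turns this fixed mapping into a learned one.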

Reducing all reasoning to words (sounds arranged linearly to carry meaning from one person to another, which only make sense collectively and in a particular order) is probably not a good way to think about intelligence. Words, though powerful for expression, fall short as a universal reasoning mechanism. To reduce reasoning to symbol associations is to risk losing the concise, unambiguous expression that another solution space would allow. The right solution requires the right space; for example, Euclidean space is not the best space for every problem.
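A small illustration of "the right space", using a change of coordinates as the example (an assumption; the text does not name one). Points inside a circle and points surrounding it generally cannot be split by a straight line in (x, y), yet in polar coordinates a single threshold on the radius r separates them. The sample points and the radius 1.0 are arbitrary.

```python
import math


def to_polar(x: float, y: float) -> tuple:
    """Map Cartesian (x, y) to polar (r, theta)."""
    return math.hypot(x, y), math.atan2(y, x)


# Hypothetical labelled points: True = inside the unit circle.
points = [(0.2, 0.1), (-0.5, 0.3), (0.0, -0.7), (1.5, 0.0), (-1.2, 1.1), (0.3, -1.4)]
labels = [True, True, True, False, False, False]

# In polar space the whole "classifier" is one comparison on r; theta is irrelevant.
predictions = [to_polar(x, y)[0] < 1.0 for x, y in points]
print(predictions == labels)  # prints: True
```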

Computers Are Much Better at Non-Verbal Reasoning

Non-verbal reasoning refers to solving problems that involve patterns, geometry, signals, or mathematical relationships rather than words or symbols. Computers are naturally suited to this kind of reasoning because they operate natively on numbers, vectors, and functions, approximating continuous quantities with high numerical precision.

For example, when analyzing a satellite image, a computer doesn’t “describe” the scene in words. Instead, it detects gradients of color, spatial frequencies, and correlations between pixels — mathematical structures far richer than any verbal description. It can identify land, water, roads, and vegetation purely by pattern recognition, without ever turning those patterns into sentences.
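A toy version of the "gradients of color" idea: a finite-difference gradient along each row of a tiny, made-up brightness grid, where bright cells stand in for land and dark cells for water. The grid values and the 0.5 threshold are illustrative assumptions; real pipelines apply larger filters (Sobel, for example) to multi-band imagery.

```python
# Hypothetical 4x6 brightness image: left side bright (land), right side dark (water).
image = [
    [0.9, 0.8, 0.9, 0.2, 0.1, 0.1],
    [0.8, 0.9, 0.8, 0.1, 0.2, 0.1],
    [0.9, 0.9, 0.8, 0.2, 0.1, 0.2],
    [0.8, 0.8, 0.9, 0.1, 0.1, 0.1],
]


def horizontal_gradient(img):
    """Forward difference I[r][c+1] - I[r][c] along every row."""
    return [[row[c + 1] - row[c] for c in range(len(row) - 1)] for row in img]


def edge_columns(img, threshold=0.5):
    """Column boundaries where brightness changes sharply in at least one row."""
    grad = horizontal_gradient(img)
    return sorted({c for row in grad for c, g in enumerate(row) if abs(g) > threshold})


print(edge_columns(image))  # prints: [2], the boundary between columns 2 and 3
```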

Another example is protein folding. In physics-based approaches, the computer predicts the three-dimensional structure of a molecule by minimizing its energy function:

$$E_{\text{total}} = \sum \left(E_{\text{bond}} + E_{\text{angle}} + E_{\text{vdW}} + E_{\text{elec}}\right)$$

This is a non-verbal optimization problem over thousands of continuous variables (three coordinates per atom) with an astronomically large space of possible shapes. Humans can reason about it conceptually, but only computers can compute it directly.
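A one-variable toy version of this energy minimization, keeping only a van der Waals term: two atoms interacting through a Lennard-Jones potential V(r) = 4ε[(σ/r)¹² - (σ/r)⁶], relaxed by gradient descent on their separation r. Here ε and σ are the well depth and distance parameters of that potential; the units (ε = σ = 1), starting distance, and step size are illustrative assumptions, and real folding codes handle thousands of coupled coordinates.

```python
def lj_energy(r: float, eps: float = 1.0, sigma: float = 1.0) -> float:
    """Lennard-Jones potential between two atoms at distance r."""
    sr6 = (sigma / r) ** 6
    return 4 * eps * (sr6 ** 2 - sr6)


def lj_gradient(r: float, eps: float = 1.0, sigma: float = 1.0) -> float:
    """dV/dr = 4*eps*(-12*sigma^12/r^13 + 6*sigma^6/r^7)."""
    return 4 * eps * (-12 * sigma ** 12 / r ** 13 + 6 * sigma ** 6 / r ** 7)


def relax(r0: float = 1.5, eta: float = 0.01, steps: int = 2000) -> float:
    """Gradient descent on the separation: r <- r - eta * dV/dr."""
    r = r0
    for _ in range(steps):
        r -= eta * lj_gradient(r)
    return r


r_min = relax()
print(round(r_min, 6), round(lj_energy(r_min), 6))  # analytic minimum: r = 2**(1/6), V = -eps
```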

Non-verbal reasoning also appears in physics simulations, weather forecasting, and self-driving systems. A neural network controlling a drone doesn’t reason in English; it reasons in forces, vectors, and probabilities — continuously predicting how thrust, wind, and gravity interact in real time.
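To show what "reasoning in forces" looks like numerically, here is a toy one-dimensional altitude simulation: thrust, gravity, and a constant downdraft are summed into a net force and integrated over time. The controller is a classical proportional-derivative (PD) rule swapped in for the neural network described above, and the mass, gains, wind force, and time step are all illustrative assumptions.

```python
G = 9.81  # gravitational acceleration, m/s^2


def simulate(target=10.0, mass=1.0, kp=4.0, kd=3.0, wind=-0.5, dt=0.01, seconds=20.0):
    """Integrate vertical motion under thrust, gravity, and wind (semi-implicit Euler)."""
    h, v = 0.0, 0.0  # altitude (m) and vertical velocity (m/s)
    for _ in range(round(seconds / dt)):
        error = target - h
        # PD controller: hover thrust plus corrections; rotors cannot push downward.
        thrust = max(0.0, mass * G + kp * error - kd * v)
        net_force = thrust - mass * G + wind
        v += (net_force / mass) * dt
        h += v * dt
    return h, v


h, v = simulate()
print(round(h, 3), round(v, 3))
```

The drone settles 0.125 m below the 10 m target because a pure PD controller facing a constant disturbance leaves a steady-state offset of |wind| / kp; adding an integral term would remove it.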

In short, computers think best where language falls short — in the silent, mathematical logic of the universe: gradients, waves, energies, and flows. Verbal reasoning explains; non-verbal reasoning computes.

The Danger of Over-Relying on Language Models for Intelligent Understanding

Language models simulate intelligence through words, not through comprehension. They generate plausible explanations, arguments, and stories — but those outputs are linguistic patterns, not genuine understanding. A model like GPT doesn’t “know” what it says; it only estimates what a knowledgeable human would say next.

This distinction matters because language, while expressive, is also deceptive: fluency can look like understanding. A system that can generate perfect grammar and reasoning-like statements might appear intelligent while lacking any grounding in reality. It is, in essence, verbal mimicry — coherence without cognition.

Over-relying on language-based reasoning risks a kind of intellectual feedback loop: models trained on human text generate more text, which future models are then trained on. Over time, the system becomes self-referential — optimized not for truth or discovery, but for linguistic plausibility.
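The feedback loop can be caricatured with a toy experiment in the spirit of model-collapse studies: each "generation" fits a Gaussian only to a small sample drawn from the previous generation's fit. A Gaussian is of course a stand-in for a language model, and the sample size, generation count, and seed are arbitrary assumptions.

```python
import random
import statistics


def recursive_fit(generations=200, n=10, seed=0):
    """Each generation fits mean/std only to samples drawn from the previous generation."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the original "human data" distribution
    history = [sigma]
    for _ in range(generations):
        samples = [rng.gauss(mu, sigma) for _ in range(n)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)  # maximum-likelihood (population) std
        history.append(sigma)
    return history


history = recursive_fit()
print([round(s, 4) for s in history[::50]])
```

The maximum-likelihood variance of n samples has expected value (n - 1)/n times the true variance, so with n = 10 each generation loses about 10% of the variance on average: the fitted distribution drifts away from the original data it started from.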

This is dangerous for science, education, and culture. When insight becomes indistinguishable from eloquence, we begin to mistake verbal smoothness for depth, and statistical completion for reasoning. True understanding requires grounding — in perception, in experience, and in the physical or mathematical reality that language merely describes.

Limits of Symbolic Reasoning in True Intelligence

Going back to the original definition of intelligence — the ability of a system to optimize an objective function within defined constraints and goals — it becomes evident that symbolic or verbal reasoning does not fully capture this process. Words describe goals, but they do not interact with the underlying space in which optimization occurs.

In mathematical systems, intelligence emerges when an agent can explore a landscape — a multidimensional function surface — and iteratively improve its position toward an optimum:

$$x_{t+1} = x_t - \eta \nabla f(x_t)$$

This process requires feedback from the function's shape (gradients, curvatures, discontinuities), all of which exist in a continuous domain. Symbolic reasoning, by contrast, operates on discrete linguistic units that lack this continuity. It can talk about optimization, but it cannot feel the function's geometry.
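The "feedback from the function's shape" can be made explicit: the sketch below never sees a formula for the gradient, but probes the landscape with central finite differences and then applies xₜ₊₁ = xₜ - η∇f(xₜ) in two dimensions. The elongated bowl f(x, y) = (x - 1)² + 10(y + 2)², the probe width h, and the learning rate are illustrative assumptions.

```python
def f(p):
    """Hypothetical elongated bowl with its minimum at (1, -2)."""
    x, y = p
    return (x - 1) ** 2 + 10 * (y + 2) ** 2


def numerical_gradient(func, p, h=1e-5):
    """Estimate each partial derivative by probing the function on both sides of p."""
    grad = []
    for i in range(len(p)):
        forward, backward = list(p), list(p)
        forward[i] += h
        backward[i] -= h
        grad.append((func(forward) - func(backward)) / (2 * h))
    return grad


def descend(func, p0, eta=0.04, steps=500):
    """x_{t+1} = x_t - eta * grad f(x_t), using only sampled function values."""
    p = list(p0)
    for _ in range(steps):
        g = numerical_gradient(func, p)
        p = [pi - eta * gi for pi, gi in zip(p, g)]
    return p


p_star = descend(f, [5.0, 5.0])
print([round(c, 4) for c in p_star])
```

The gap between the two curvatures (2 along x, 20 along y) is exactly the geometric information the update depends on: a learning rate above 0.1 would make the steep y-direction diverge even though the shallow x-direction would still converge.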

As a result, purely verbal reasoning systems like large language models can simulate understanding through expression, yet they remain blind to the actual structure of the problem space. They manipulate symbols that reference goals, but they do not sense the topology, curvature, or energy landscape those goals inhabit.

In essence, verbal reasoning describes associations; true intelligence requires interaction with the underlying reality those associations point to.

PS: The number of times I used the phrase "in other words" in this article is quite telling of how ambiguous words are.